AWS SageMaker: 7 Powerful Features You Must Know in 2024

Imagine building, training, and deploying machine learning models without wrestling with infrastructure. That’s exactly what AWS SageMaker promises—and delivers. In this deep dive, we’ll explore how SageMaker is revolutionizing ML workflows with speed, scalability, and simplicity.

What Is AWS SageMaker and Why It Matters

Amazon Web Services (AWS) SageMaker is a fully managed service that empowers data scientists and developers to build, train, and deploy machine learning (ML) models at scale. Launched in 2017, it was designed to remove the heavy lifting traditionally associated with ML development—such as managing servers, tuning algorithms, or handling deployment pipelines.

Core Purpose of AWS SageMaker

The primary goal of AWS SageMaker is to democratize machine learning by making it accessible to teams regardless of their ML expertise. Whether you’re a seasoned data scientist or a developer new to ML, SageMaker provides tools that streamline the entire lifecycle—from data preparation to real-time inference.

- Eliminates infrastructure management

- Accelerates model development cycles

- Supports both custom and pre-built algorithms

By abstracting away the complexity, AWS SageMaker allows users to focus on innovation rather than operational overhead. This shift has made it a cornerstone for enterprises adopting AI at scale.

How AWS SageMaker Fits into the ML Workflow

A typical machine learning workflow involves several stages: data labeling, preprocessing, model training, evaluation, tuning, and deployment. AWS SageMaker integrates seamlessly into each phase, offering purpose-built tools for every step.

- Data can be ingested directly from Amazon S3

- Labeling tasks can be automated using SageMaker Ground Truth

- Notebooks provide an interactive environment for experimentation

“SageMaker reduces the time to go from idea to production by up to 70%.” — AWS Case Study, 2023

This end-to-end integration ensures consistency, reproducibility, and faster time-to-market for ML applications.

Key Components of AWS SageMaker

To understand the full power of AWS SageMaker, it’s essential to explore its core components. Each module serves a specific function in the ML pipeline, and together they form a cohesive ecosystem that supports rapid development and deployment.

SageMaker Studio: The Unified Development Environment

SageMaker Studio is a web-based, visual interface that brings all your ML tools into one place. Think of it as an IDE (Integrated Development Environment) tailored for machine learning. It includes Jupyter notebooks, debugging tools, experiment tracking, and collaboration features.

- Single pane of glass for all SageMaker activities

- Real-time collaboration between team members

- Integrated version control and model lineage tracking

With SageMaker Studio, teams can track experiments, compare model performance, and reproduce results effortlessly. It’s particularly useful for organizations practicing MLOps (Machine Learning Operations).

SageMaker Notebooks: Interactive ML Development

At the heart of many ML workflows are Jupyter notebooks. AWS SageMaker provides managed notebook instances that come pre-installed with popular ML frameworks like TensorFlow, PyTorch, and scikit-learn.

- Fully managed compute instances with auto-shutdown options

- Seamless integration with other AWS services (e.g., S3, IAM)

- Support for custom Docker containers for specialized environments

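Unlike traditional setups where you manage EC2 instances manually, SageMaker notebooks handle the underlying infrastructure for you, including provisioning, framework setup, and security configuration. When you need more compute, you can stop the instance and switch it to a larger instance type.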

SageMaker Training and Hosting Services

One of the most powerful aspects of AWS SageMaker is its ability to train models at scale. The service supports distributed training across multiple GPUs or instances, which is crucial for deep learning models that require massive computational power.

- Built-in algorithms optimized for performance (e.g., XGBoost, Linear Learner)

- Support for custom algorithms via Docker containers

- Automatic model tuning (hyperparameter optimization)

Once trained, models can be deployed to secure endpoints for real-time predictions or used in batch transformations. Endpoints can be configured to auto-scale and are monitored through Amazon CloudWatch, which helps keep them reliable and performant.

How AWS SageMaker Simplifies Model Training

Training machine learning models is often the most resource-intensive phase of development. AWS SageMaker streamlines this process with automation, scalability, and built-in intelligence.

Built-in Algorithms for Common Use Cases

AWS SageMaker comes with a suite of built-in algorithms that cover a wide range of ML tasks, including classification, regression, clustering, and recommendation systems. These algorithms are optimized for performance and cost-efficiency on AWS infrastructure.

- XGBoost for gradient boosting

- K-Means for unsupervised clustering

- Object2Vec for learning embeddings of paired objects, such as sentence pairs in NLP tasks

Using these pre-built algorithms eliminates the need to write low-level training code, which speeds up prototyping and deployment. For example, a fraud detection prototype built on XGBoost can often be trained and evaluated in hours rather than weeks.
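To make this concrete, here is a minimal sketch of the request that the low-level `create_training_job` API (via boto3) expects for the built-in XGBoost algorithm. The job name, role ARN, S3 paths, instance type, and hyperparameter values are illustrative assumptions. The image URI is a placeholder because the real one depends on your region and algorithm version.

```python
def build_xgboost_training_request(job_name, role_arn, image_uri, train_s3, output_s3):
    """Build a CreateTrainingJob request for SageMaker's built-in XGBoost.

    Every argument is a caller-supplied placeholder; this function only
    assembles a dictionary and never contacts AWS.
    """
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {
            "TrainingImage": image_uri,  # region-specific XGBoost container image
            "TrainingInputMode": "File",  # copy the data to the instance before training
        },
        "RoleArn": role_arn,  # IAM role SageMaker assumes to read S3 and write output
        "InputDataConfig": [
            {
                "ChannelName": "train",
                "DataSource": {
                    "S3DataSource": {
                        "S3DataType": "S3Prefix",
                        "S3Uri": train_s3,
                        "S3DataDistributionType": "FullyReplicated",
                    }
                },
                # Built-in XGBoost reads headerless CSV with the label in the first column.
                "ContentType": "text/csv",
            }
        ],
        "OutputDataConfig": {"S3OutputPath": output_s3},
        "ResourceConfig": {"InstanceType": "ml.m5.xlarge", "InstanceCount": 1, "VolumeSizeInGB": 10},
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
        # The API expects every hyperparameter value as a string.
        "HyperParameters": {
            "objective": "binary:logistic",  # fraud / not fraud
            "num_round": "100",
            "max_depth": "5",
            "eta": "0.2",
        },
    }


def submit_training_job(request):
    import boto3  # imported lazily so the builder runs without AWS dependencies

    return boto3.client("sagemaker").create_training_job(**request)
```

In the SageMaker Python SDK, `sagemaker.image_uris.retrieve` looks up the container image URI. Pass it `"xgboost"`, your region, and an algorithm version. The SDK's `Estimator` class wraps this same training API at a higher level.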

Automatic Model Tuning (Hyperparameter Optimization)

Hyperparameter tuning is critical for achieving optimal model performance, but it’s often time-consuming and complex. AWS SageMaker automates this process. It uses Bayesian optimization by default to search for the best combination of parameters, and random search, Hyperband, and grid search are also available.

- Define a range of hyperparameters to test

- SageMaker runs multiple training jobs in parallel

- Identifies the best-performing training job based on the objective metric you specify

This feature significantly reduces manual trial-and-error, improving accuracy while saving time and compute costs. According to AWS, automatic model tuning can improve model performance by up to 20% compared to manual tuning.
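The tuning workflow described above maps onto the `create_hyper_parameter_tuning_job` API. The sketch below builds that request for the built-in XGBoost algorithm, maximizing validation AUC over two hyperparameters. The ranges, job limits, metric choice, and resource settings are illustrative assumptions, not recommendations.

```python
def build_tuning_request(job_name, role_arn, image_uri, train_s3, validation_s3, output_s3,
                         max_jobs=20, max_parallel=4):
    """Build a CreateHyperParameterTuningJob request (assumed values throughout)."""
    if max_parallel > max_jobs:
        raise ValueError("max_parallel cannot exceed max_jobs")

    def channel(name, uri):
        return {
            "ChannelName": name,
            "DataSource": {"S3DataSource": {"S3DataType": "S3Prefix", "S3Uri": uri,
                                            "S3DataDistributionType": "FullyReplicated"}},
            "ContentType": "text/csv",
        }

    return {
        "HyperParameterTuningJobName": job_name,  # at most 32 characters
        "HyperParameterTuningJobConfig": {
            "Strategy": "Bayesian",
            # Built-in XGBoost emits validation:auc when eval_metric is "auc"
            # and a validation channel is supplied.
            "HyperParameterTuningJobObjective": {"Type": "Maximize", "MetricName": "validation:auc"},
            "ResourceLimits": {"MaxNumberOfTrainingJobs": max_jobs,
                               "MaxParallelTrainingJobs": max_parallel},
            "ParameterRanges": {
                # Range bounds are passed as strings.
                "ContinuousParameterRanges": [
                    {"Name": "eta", "MinValue": "0.01", "MaxValue": "0.3", "ScalingType": "Logarithmic"},
                ],
                "IntegerParameterRanges": [
                    {"Name": "max_depth", "MinValue": "3", "MaxValue": "10", "ScalingType": "Auto"},
                ],
            },
        },
        "TrainingJobDefinition": {
            # Hyperparameters held fixed for every trial.
            "StaticHyperParameters": {"objective": "binary:logistic", "eval_metric": "auc", "num_round": "200"},
            "AlgorithmSpecification": {"TrainingImage": image_uri, "TrainingInputMode": "File"},
            "RoleArn": role_arn,
            "InputDataConfig": [channel("train", train_s3), channel("validation", validation_s3)],
            "OutputDataConfig": {"S3OutputPath": output_s3},
            "ResourceConfig": {"InstanceType": "ml.m5.xlarge", "InstanceCount": 1, "VolumeSizeInGB": 10},
            "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
        },
    }


def start_tuning_job(request):
    import boto3  # lazy import: only needed when actually calling AWS

    return boto3.client("sagemaker").create_hyper_parameter_tuning_job(**request)
```

`MaxParallelTrainingJobs` controls the trade-off. With Bayesian search, fewer parallel jobs let each new trial learn from more completed ones, while more parallel jobs finish the search sooner.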

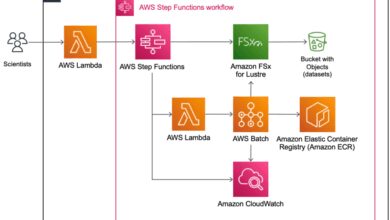

Distributed Training for Large-Scale Models

For deep learning models with billions of parameters, single-machine training is impractical. AWS SageMaker supports distributed training across multiple instances in several ways: Horovod, the native distributed capabilities of TensorFlow and PyTorch, and SageMaker’s own distributed data-parallel and model-parallel libraries.

- Parameter Server and Data Parallel strategies supported

- Optional sharding of S3 datasets across instances, so each worker reads a different subset

- Integrated with FSx for Lustre for high-speed file access

This capability enables enterprises to train large models such as BERT or ResNet in a fraction of the time a single machine would need.
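On the infrastructure side, scaling out mostly means requesting more instances and deciding how the training data is split between them. The sketch below takes a simplified, hypothetical `CreateTrainingJob` request and returns a multi-instance copy whose training channel uses `ShardedByS3Key`. With that setting, each of the n instances receives roughly 1/n of the S3 objects instead of a full copy. The instance types and counts are assumptions. The training script itself must still implement a distributed strategy (for example with Horovod or PyTorch's distributed package), because SageMaker does not parallelize single-machine code for you.

```python
import copy

# Hypothetical single-instance request; only the fields this sketch touches are shown.
base_request = {
    "TrainingJobName": "resnet-training",
    "ResourceConfig": {"InstanceType": "ml.p3.2xlarge", "InstanceCount": 1, "VolumeSizeInGB": 100},
    "InputDataConfig": [
        {
            "ChannelName": "train",
            "DataSource": {"S3DataSource": {"S3DataType": "S3Prefix",
                                            "S3Uri": "s3://my-bucket/images/train/",
                                            "S3DataDistributionType": "FullyReplicated"}},
        }
    ],
}


def make_distributed(request, instance_count, instance_type="ml.p3.16xlarge"):
    """Return a copy of `request` that runs on several instances with sharded input."""
    if instance_count < 2:
        raise ValueError("distributed training needs at least two instances")
    distributed = copy.deepcopy(request)  # leave the original request untouched
    distributed["ResourceConfig"]["InstanceCount"] = instance_count
    distributed["ResourceConfig"]["InstanceType"] = instance_type
    for channel in distributed["InputDataConfig"]:
        if channel["ChannelName"] == "train":
            # Each instance gets about 1/instance_count of the objects under the
            # prefix, instead of every instance receiving the full dataset.
            channel["DataSource"]["S3DataSource"]["S3DataDistributionType"] = "ShardedByS3Key"
    return distributed


distributed_request = make_distributed(base_request, instance_count=4)
```

Sharding by S3 key only balances well when the dataset is split into many similarly sized objects. A single large file cannot be spread across instances this way.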

Deploying Models with AWS SageMaker

Building a model is only half the battle; deploying it reliably and efficiently is equally important. AWS SageMaker excels in model deployment by offering flexible, scalable, and secure hosting options.

Real-Time Inference with SageMaker Endpoints

SageMaker allows you to deploy models as HTTPS endpoints that serve real-time predictions with low latency. These endpoints run on managed ML compute instances and can be configured to auto-scale, so they can be sized to handle thousands of requests per second.

- Support for A/B testing between model versions

- Can integrate with API Gateway and Lambda for serverless architectures

- End-to-end encryption and VPC integration for security

For example, a recommendation engine used by an e-commerce platform can be deployed as a SageMaker endpoint, delivering personalized product suggestions in under 100ms.
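A/B testing on an endpoint is expressed through production variants. One endpoint configuration lists several models, and each variant receives its weight divided by the sum of all weights as its share of traffic. The sketch below builds such a configuration for the low-level `create_endpoint_config` API and adds a small invocation helper using the `sagemaker-runtime` client. The model names, instance type, and 90/10 split are assumptions.

```python
def build_ab_endpoint_config(config_name, model_a, model_b, weight_b=0.1):
    """Endpoint config that routes about `weight_b` of requests to model_b."""
    if not 0.0 < weight_b < 1.0:
        raise ValueError("weight_b must be strictly between 0 and 1")

    def variant(name, model_name, weight):
        return {
            "VariantName": name,
            "ModelName": model_name,  # an existing SageMaker model (created with create_model)
            "InstanceType": "ml.m5.large",
            "InitialInstanceCount": 1,
            "InitialVariantWeight": weight,
        }

    return {
        "EndpointConfigName": config_name,
        "ProductionVariants": [
            variant("ModelA", model_a, 1.0 - weight_b),
            variant("ModelB", model_b, weight_b),
        ],
    }


def traffic_shares(config):
    """Fraction of requests each variant receives: its weight over the total weight."""
    variants = config["ProductionVariants"]
    total = sum(v["InitialVariantWeight"] for v in variants)
    return {v["VariantName"]: v["InitialVariantWeight"] / total for v in variants}


def predict(endpoint_name, csv_row):
    """Send one CSV record to a deployed endpoint and return the raw prediction."""
    import boto3  # lazy import so the builders above run without AWS access

    runtime = boto3.client("sagemaker-runtime")
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name, ContentType="text/csv", Body=csv_row
    )
    return response["Body"].read().decode("utf-8")
```

After `create_endpoint_config`, calling `create_endpoint` with the same `EndpointConfigName` brings the endpoint up. CloudWatch reports metrics per variant, so you can compare the two models before shifting more traffic to the new one.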

Batch Transform for Offline Predictions

Not all use cases require real-time responses. For scenarios like generating daily reports or processing large historical datasets, SageMaker offers Batch Transform—a feature that applies your model to entire datasets stored in Amazon S3.

- No need to provision or manage servers

- Processes data in parallel for faster throughput

- Output automatically saved back to S3

This is ideal for financial risk modeling, customer segmentation, or any batch-oriented analytics task.
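A Batch Transform job is defined by three things: where the input lives, how to split it into records, and where the results should go. The sketch below builds a `create_transform_job` request for CSV input stored under an S3 prefix. The job and model names, paths, instance settings, and CSV format are assumptions.

```python
def build_transform_request(job_name, model_name, input_s3, output_s3, instance_count=2):
    """Build a CreateTransformJob request that scores every CSV line under input_s3."""
    return {
        "TransformJobName": job_name,
        "ModelName": model_name,  # a model already registered with create_model
        "TransformInput": {
            "DataSource": {"S3DataSource": {"S3DataType": "S3Prefix", "S3Uri": input_s3}},
            "ContentType": "text/csv",
            "SplitType": "Line",  # treat each line as one record
        },
        "TransformOutput": {
            "S3OutputPath": output_s3,
            "AssembleWith": "Line",  # write one prediction per line
        },
        # Input files are spread across these instances, which provides the parallelism.
        "TransformResources": {"InstanceType": "ml.m5.xlarge", "InstanceCount": instance_count},
        "BatchStrategy": "MultiRecord",  # pack several records into each request to the model
    }


def start_transform_job(request):
    import boto3  # lazy import; only needed to call AWS

    return boto3.client("sagemaker").create_transform_job(**request)
```

According to the AWS documentation, each input object produces an output object under `S3OutputPath` with `.out` appended to its name. That makes it straightforward to match predictions back to their source files.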

Multi-Model Endpoints for Cost Efficiency

In production environments, hosting dozens or hundreds of models individually can become expensive and inefficient. AWS SageMaker introduces Multi-Model Endpoints (MMEs), which allow a single endpoint to serve multiple models dynamically.

- Models are loaded on-demand from S3

- Reduces memory footprint and operational costs

- Well suited to SaaS platforms that host a separate model for each tenant

MMEs are particularly beneficial for companies offering AI-as-a-Service, where each customer may have a unique model but shared infrastructure.
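Mechanically, a multi-model endpoint is a model whose container runs in `MultiModel` mode and points at an S3 prefix instead of a single artifact. Each request then names the artifact to use via `TargetModel`. The sketch below assumes a container image that supports multi-model hosting and a hypothetical one-archive-per-tenant naming scheme (`<tenant>.tar.gz`).

```python
def build_multi_model_request(model_name, role_arn, image_uri, models_prefix):
    """CreateModel request for a multi-model endpoint."""
    return {
        "ModelName": model_name,
        "ExecutionRoleArn": role_arn,
        "PrimaryContainer": {
            "Image": image_uri,  # must be a container that supports multi-model hosting
            "Mode": "MultiModel",
            "ModelDataUrl": models_prefix,  # S3 prefix holding many .tar.gz model archives
        },
    }


def target_model_for(tenant_id):
    """Hypothetical convention: one archive per tenant, directly under the prefix."""
    return f"{tenant_id}.tar.gz"


def predict_for_tenant(endpoint_name, tenant_id, csv_payload):
    import boto3  # lazy import so the helpers above run offline

    runtime = boto3.client("sagemaker-runtime")
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        TargetModel=target_model_for(tenant_id),  # path relative to ModelDataUrl
        ContentType="text/csv",
        Body=csv_payload,
    )
    return response["Body"].read().decode("utf-8")
```

The first request for a given tenant loads that archive from S3, so it is noticeably slower. Later requests use the loaded copy until SageMaker unloads it to free memory for other models.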

Monitoring and Managing Models in Production

Once models are deployed, ongoing monitoring is crucial to ensure they perform as expected. AWS SageMaker provides robust tools for observability, drift detection, and model governance.

SageMaker Model Monitor: Detecting Data Drift

Data drift (a change over time in the statistical properties of input data) is a common cause of model degradation. Once data capture and a monitoring schedule are configured, SageMaker Model Monitor compares captured inputs and predictions against a baseline and flags violations.

- Creates baselines from training data

- Compares live traffic against baseline statistics

- Emits CloudWatch metrics that can trigger alarms and SNS notifications

For instance, if a credit scoring model starts receiving applications with significantly higher income levels than during training, Model Monitor flags this shift, prompting retraining.
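The idea behind comparing live traffic to a baseline can be illustrated in a few lines of plain Python. This is a deliberately simplified stand-in, not Model Monitor's algorithm, which computes per-feature statistics and constraints and reports violations. The sketch flags drift when a live feature's mean moves more than a chosen number of baseline standard deviations from the training mean. The threshold and income figures are hypothetical.

```python
from statistics import mean, stdev


def build_baseline(training_values):
    """Summarize one numeric feature from the training data."""
    return {"mean": mean(training_values), "std": stdev(training_values)}


def drift_detected(baseline, live_values, threshold=1.0):
    """True if the live mean is more than `threshold` baseline standard deviations from the training mean."""
    shift = abs(mean(live_values) - baseline["mean"])
    return shift > threshold * baseline["std"]


# Hypothetical applicant incomes (in thousands) seen during training.
income_baseline = build_baseline([40, 45, 50, 55, 60])

print(drift_detected(income_baseline, [48, 52, 50]))             # False: similar traffic
print(drift_detected(income_baseline, [90, 95, 100, 105, 110]))  # True: much higher incomes
```

A real monitor would look at whole distributions rather than only the mean. A shift in variance or a new long tail can hurt a model even when the average stays put.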

SageMaker Debugger for Training Insights

During training, it’s often difficult to know why a model isn’t converging or is overfitting. SageMaker Debugger captures tensors, gradients, and system metrics in real time, enabling deep inspection of the training process.

- Visualize loss curves and weight distributions

- Detect vanishing gradients or exploding activations

- Configure rules that automatically stop a training job when they detect a problem

This level of transparency helps data scientists debug issues faster and improve model quality.
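Debugger's built-in rules run against the tensors it saves during training. The plain-Python sketch below is a rough, hypothetical analogue of a vanishing-gradient rule. It flags training steps whose gradient L2 norm falls below a threshold and recommends stopping once the most recent few steps are all flagged. It is not Debugger's implementation, and the threshold, patience window, and gradient values are assumptions.

```python
import math


def l2_norm(values):
    return math.sqrt(sum(v * v for v in values))


def vanishing_steps(gradients_by_step, threshold=1e-7):
    """Steps whose gradient norm fell below `threshold`, in step order."""
    return [step for step in sorted(gradients_by_step)
            if l2_norm(gradients_by_step[step]) < threshold]


def should_stop(gradients_by_step, threshold=1e-7, patience=3):
    """Stop if each of the last `patience` steps has a vanishing gradient."""
    steps = sorted(gradients_by_step)
    if len(steps) < patience:
        return False
    flagged = set(vanishing_steps(gradients_by_step, threshold))
    return all(step in flagged for step in steps[-patience:])


# Hypothetical flattened gradients captured at four training steps.
captured = {0: [0.5, -0.2], 1: [1e-9, 2e-9], 2: [3e-10], 3: [0.0]}
print(vanishing_steps(captured))  # [1, 2, 3]
print(should_stop(captured))      # True
```

Requiring several consecutive flagged steps (the `patience` window) avoids stopping a job because of a single unusually small update.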

Audit and Compliance with SageMaker Lineage Tracking

In regulated industries like healthcare or finance, being able to trace how a model was built is often a regulatory requirement. SageMaker ML Lineage Tracking automatically records model lineage, capturing data sources, training jobs, parameters, and deployment history.

- Full audit trail for every model version

- Integration with AWS CloudTrail for security logging

- Support for export to compliance reporting systems

This helps organizations demonstrate adherence to standards like GDPR, HIPAA, or SOC 2, giving them greater confidence in their ML governance.

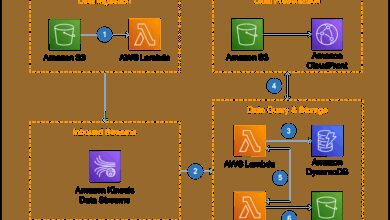

Integrating AWS SageMaker with Other AWS Services

AWS SageMaker doesn’t operate in isolation. Its true power emerges when integrated with other AWS services, creating a seamless data-to-insights pipeline.

Connecting SageMaker with Amazon S3 and Glue

Amazon S3 acts as the primary data lake for most SageMaker workflows. Data scientists can directly read from and write to S3 buckets without moving data. AWS Glue complements this by providing ETL (Extract, Transform, Load) capabilities to clean and structure raw data before training.

- S3 provides durable, scalable storage for datasets

- Glue crawlers discover data schema and generate ETL scripts

- Data Catalog makes datasets discoverable across teams

This integration enables automated data pipelines where new data triggers retraining jobs via EventBridge and Lambda.
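A minimal version of that trigger is a Lambda function subscribed to an EventBridge rule for S3 `Object Created` events. This requires EventBridge notifications to be enabled on the bucket. The sketch below reads the bucket and key from the event and starts a training job on the folder that contains the new object. The environment variable names, job-name scheme, and resource settings are hypothetical. The SageMaker client is injectable, so the handler can be exercised without AWS.

```python
import os
import time


def build_retraining_request(bucket, key, role_arn, image_uri, output_s3, now=None):
    """CreateTrainingJob request that trains on the S3 folder containing `key`."""
    folder = key.rsplit("/", 1)[0] + "/" if "/" in key else ""
    timestamp = int(now if now is not None else time.time())
    return {
        # Must be unique per account and region; two uploads in the same second would collide.
        "TrainingJobName": f"retrain-{timestamp}",
        "AlgorithmSpecification": {"TrainingImage": image_uri, "TrainingInputMode": "File"},
        "RoleArn": role_arn,
        "InputDataConfig": [{
            "ChannelName": "train",
            "DataSource": {"S3DataSource": {"S3DataType": "S3Prefix",
                                            "S3Uri": f"s3://{bucket}/{folder}"}},
        }],
        "OutputDataConfig": {"S3OutputPath": output_s3},
        "ResourceConfig": {"InstanceType": "ml.m5.xlarge", "InstanceCount": 1, "VolumeSizeInGB": 10},
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
    }


def handler(event, context, sagemaker_client=None):
    """Lambda entry point for an EventBridge 'Object Created' event from S3."""
    if event.get("detail-type") != "Object Created":
        return {"started": False}
    bucket = event["detail"]["bucket"]["name"]
    key = event["detail"]["object"]["key"]
    request = build_retraining_request(
        bucket, key,
        role_arn=os.environ["TRAINING_ROLE_ARN"],  # hypothetical variable names
        image_uri=os.environ["TRAINING_IMAGE_URI"],
        output_s3=os.environ["MODEL_OUTPUT_S3"],
    )
    if sagemaker_client is None:
        import boto3  # lazy import; Lambda's Python runtime ships with boto3
        sagemaker_client = boto3.client("sagemaker")
    sagemaker_client.create_training_job(**request)
    return {"started": True, "TrainingJobName": request["TrainingJobName"]}
```

In production you would likely debounce this trigger, for example retraining on a schedule or after a batch of files lands, rather than starting a job for every single upload.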

Using SageMaker with Lambda and API Gateway

For serverless ML applications, SageMaker endpoints can be invoked from AWS Lambda functions. This setup suits event-driven architectures, such as processing images uploaded to S3 or analyzing logs in real time.

- API Gateway exposes SageMaker endpoints as REST APIs

- Lambda acts as a lightweight proxy or preprocessor

- Enables microservices-based ML architectures

An example use case is a chatbot that uses a SageMaker-hosted NLP model to interpret user intent, triggered by a webhook from a messaging platform.
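For the chatbot example, the Lambda function sits behind an API Gateway proxy integration. It parses the request body, forwards the text to the endpoint with `invoke_endpoint`, and wraps the model's answer in the response shape API Gateway expects. Several details are assumptions: the JSON request schema (`{"inputs": ...}`), the `ENDPOINT_NAME` environment variable, and the `intent` response field. The real payload format depends on the model container.

```python
import json
import os


def handler(event, context, runtime=None):
    """API Gateway (proxy integration) -> SageMaker endpoint -> JSON response."""
    try:
        payload = json.loads(event.get("body") or "{}")
        text = payload["text"]
    except (ValueError, KeyError, TypeError):
        return {"statusCode": 400,
                "body": json.dumps({"error": "expected a JSON body with a 'text' field"})}

    if runtime is None:
        import boto3  # lazy import; injectable client keeps the handler testable
        runtime = boto3.client("sagemaker-runtime")

    response = runtime.invoke_endpoint(
        EndpointName=os.environ["ENDPOINT_NAME"],  # hypothetical variable name
        ContentType="application/json",
        Body=json.dumps({"inputs": text}),  # schema depends on the model container (assumption)
    )
    prediction = json.loads(response["Body"].read())
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"intent": prediction}),
    }
```

Keeping preprocessing and validation in Lambda means the endpoint only ever sees well-formed requests, and bad input is rejected before it costs any inference time.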

SageMaker and IAM: Securing Access Control

Security is paramount when dealing with sensitive data and models. AWS Identity and Access Management (IAM) allows fine-grained control over who can access SageMaker resources.

- Role-based access to notebooks, training jobs, and endpoints

- Integration with AWS IAM Identity Center (the successor to AWS Single Sign-On)

- Encryption at rest with AWS KMS keys and in transit with TLS

Organizations can enforce least-privilege principles, ensuring that only authorized personnel can modify or deploy models.
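In practice, least privilege usually means scoping each role to the exact actions and resources it needs. The sketch below builds an IAM policy that lets an application invoke one specific endpoint and nothing else. The region, account ID, endpoint name, role name, and policy name are placeholders.

```python
import json


def invoke_only_policy(region, account_id, endpoint_name):
    """IAM policy document allowing InvokeEndpoint on a single endpoint."""
    endpoint_arn = f"arn:aws:sagemaker:{region}:{account_id}:endpoint/{endpoint_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeSingleEndpoint",
                "Effect": "Allow",
                "Action": ["sagemaker:InvokeEndpoint"],
                "Resource": endpoint_arn,
            }
        ],
    }


def attach_inline_policy(role_name, policy_name, policy):
    import boto3  # lazy import; only needed when applying the policy

    boto3.client("iam").put_role_policy(
        RoleName=role_name,
        PolicyName=policy_name,
        PolicyDocument=json.dumps(policy),
    )
```

Because IAM denies by default, a role holding only this policy cannot create training jobs, delete endpoints, or call other endpoints, unless some other attached policy grants those permissions.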

Real-World Use Cases of AWS SageMaker

The versatility of AWS SageMaker makes it suitable for a wide array of industries and applications. From healthcare to retail, companies are leveraging SageMaker to drive innovation and efficiency.

Fraud Detection in Financial Services

Banks and fintech companies use AWS SageMaker to detect fraudulent transactions in real time. By training models on historical transaction data, they can identify suspicious patterns and flag them instantly.

- Uses anomaly detection algorithms like Random Cut Forest

- Processes millions of transactions daily

- Integrates with fraud management systems via APIs

One major European bank reduced false positives by 35% after migrating to SageMaker, improving customer experience while maintaining security.

Personalized Recommendations in E-Commerce

Leading e-commerce platforms use SageMaker to power recommendation engines that suggest products based on user behavior, purchase history, and browsing patterns.

- Leverages factorization machines and deep learning models

- Updates recommendations in near real-time

- Scales to handle peak traffic during sales events

A global retailer reported a 22% increase in conversion rates after implementing a SageMaker-powered recommendation system.

Predictive Maintenance in Manufacturing

Manufacturers use sensor data from equipment to predict failures before they happen. AWS SageMaker analyzes time-series data to forecast maintenance needs, reducing downtime and repair costs.

- Ingests data from IoT devices via AWS IoT Core

- Trains LSTM models for sequence prediction

- Triggers alerts via Amazon SNS or integrates with CMMS platforms

A Fortune 500 industrial company cut unplanned downtime by 40% using SageMaker-driven predictive analytics.

What is AWS SageMaker used for?

AWS SageMaker is used to build, train, and deploy machine learning models at scale. It provides a fully managed environment that simplifies every stage of the ML lifecycle, from data preparation to real-time inference, making it ideal for both beginners and advanced practitioners.

Is AWS SageMaker free to use?

AWS SageMaker offers a free tier with limited usage of notebooks, training, and hosting. However, most production workloads incur costs based on compute, storage, and data transfer. Pricing is pay-as-you-go, with no upfront fees.

Can I use PyTorch and TensorFlow on AWS SageMaker?

Yes, AWS SageMaker natively supports popular deep learning frameworks like PyTorch and TensorFlow. You can use pre-built containers or bring your own custom Docker images to run models in your preferred environment.

How does SageMaker handle model security?

SageMaker integrates with AWS IAM for access control, supports VPC isolation, and encrypts data at rest and in transit using AWS KMS. It also allows private endpoints to prevent public internet exposure.

Does SageMaker support MLOps practices?

Yes, SageMaker includes native support for MLOps through features like experiment tracking, model registry, automated pipelines (SageMaker Pipelines), and CI/CD integration, enabling reproducible and scalable ML workflows.

AWS SageMaker has redefined how organizations approach machine learning by removing infrastructure barriers and providing a comprehensive suite of tools for the entire ML lifecycle. From interactive notebooks to automated model tuning, real-time inference, and robust monitoring, it empowers teams to innovate faster and deploy with confidence. Whether you’re building a simple classifier or a complex deep learning system, SageMaker offers the scalability, security, and integration needed to succeed in today’s AI-driven world. As machine learning becomes increasingly central to digital transformation, AWS SageMaker stands out as a powerful, flexible, and enterprise-ready platform.


Further Reading: