AWS Glue: 7 Powerful Features You Must Know in 2024

Ever felt overwhelmed by messy data scattered across systems? AWS Glue is your ultimate ally—automating ETL processes so you can focus on insights, not infrastructure. Let’s dive into how this serverless powerhouse transforms data workflows.

What Is AWS Glue and Why It Matters

AWS Glue is a fully managed, serverless data integration service that simplifies the process of preparing and loading data for analytics. Built by Amazon Web Services, it automates the heavy lifting of data discovery, transformation, and job orchestration. Whether you’re dealing with structured, semi-structured, or unstructured data, AWS Glue streamlines ETL (Extract, Transform, Load) workflows without requiring you to manage servers.

Core Components of AWS Glue

AWS Glue isn’t just a single tool—it’s an ecosystem of interconnected services working in harmony. The main components include the Data Catalog, Crawlers, ETL Jobs, and Triggers. Each plays a vital role in automating data pipelines.

- Data Catalog: Acts as a persistent metadata repository, storing table definitions, schema versions, and partition information.

- Crawlers: Scan your data stores (like S3, RDS, Redshift) to infer schemas and populate the Data Catalog automatically.

- ETL Jobs: Define the logic for transforming and moving data, written in Python or Scala, and executed in a serverless Spark environment.

- Triggers: Control when jobs run, whether on a schedule, on demand, or in response to events.

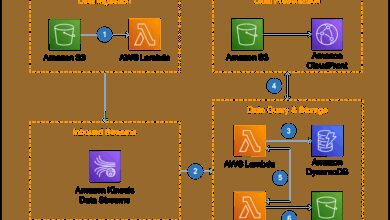

How AWS Glue Fits Into Modern Data Architecture

In today’s data-driven world, organizations collect data from diverse sources: databases, logs, IoT devices, and more. AWS Glue acts as the central nervous system of a modern data lake architecture. It connects siloed data sources, transforms them into usable formats, and loads them into analytical systems like Amazon Redshift, Athena, or SageMaker.

For example, a retail company might use AWS Glue to consolidate customer transaction data from on-premises databases, e-commerce platforms, and mobile apps into a unified data lake on S3. Once cleaned and transformed, this data fuels business intelligence dashboards and machine learning models.

“AWS Glue reduces the time to build ETL pipelines from weeks to hours.” — AWS Customer Case Study, 2023

AWS Glue Data Catalog: The Heart of Metadata Management

The AWS Glue Data Catalog is more than just a schema registry—it’s a centralized metadata management system that enables seamless data discovery and governance. Think of it as a digital library for your data assets, where every table, partition, and column is cataloged and searchable.

Automated Schema Discovery with Crawlers

One of the standout features of AWS Glue is its ability to automatically infer schemas using Crawlers. You point a crawler at a data source—say, a folder in Amazon S3 containing JSON files—and it scans the data, determines the structure, and creates a table definition in the Data Catalog.

Crawlers support a wide range of formats: CSV, JSON, Parquet, ORC, Avro, and even custom formats. They can also detect schema changes over time and update the catalog accordingly, ensuring your metadata stays current.

- Crawlers can be scheduled to run hourly, daily, or on-demand.

- They integrate with AWS Lake Formation for fine-grained access control.

- Support for custom classifiers allows parsing of non-standard data formats.
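
The crawler settings described above map fairly directly onto the parameters of the `create_crawler` call in boto3. The sketch below only builds the request dictionary, so it can be inspected without AWS credentials. The crawler name, role ARN, database name, bucket path, and schedule are hypothetical placeholders, not values from any real account.

```python
def build_crawler_request(name, role_arn, database, s3_path, schedule=None):
    """Return keyword arguments for boto3's glue.create_crawler()."""
    request = {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,
        "Targets": {"S3Targets": [{"Path": s3_path}]},
        # Update table definitions when the schema changes; only log deletions.
        "SchemaChangePolicy": {
            "UpdateBehavior": "UPDATE_IN_DATABASE",
            "DeleteBehavior": "LOG",
        },
    }
    if schedule is not None:
        # Omitting Schedule leaves the crawler on-demand.
        request["Schedule"] = schedule
    return request


# All values below are illustrative placeholders.
request = build_crawler_request(
    name="sales-json-crawler",
    role_arn="arn:aws:iam::123456789012:role/ExampleGlueCrawlerRole",
    database="sales_db",
    s3_path="s3://example-bucket/sales/json/",
    schedule="cron(0 2 * * ? *)",  # daily at 02:00 UTC
)

# With credentials configured, the request could be submitted like this:
# import boto3
# boto3.client("glue").create_crawler(**request)
```

Keeping the request as plain data makes it easy to review or version-control before anything is created in an account.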

Integration with Other AWS Services

The Data Catalog isn’t isolated—it’s designed to work seamlessly with other AWS analytics services. Amazon Athena, for instance, uses the Glue Data Catalog to query data directly from S3. Similarly, Amazon Redshift Spectrum and EMR can access table definitions stored in Glue, eliminating the need for redundant schema management.

This interoperability reduces complexity and ensures consistency across your data ecosystem. Instead of maintaining separate metadata stores for each service, you have a single source of truth.
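
Because every service reads the same definition, a small helper can show what that shared metadata looks like. The sketch below flattens a response shaped like the one boto3's `glue.get_table` returns. The sample response is hand-written for illustration and includes only the fields the helper reads; the table, column, and bucket names are made up.

```python
def summarize_table(get_table_response):
    """Reduce a glue.get_table() style response to the fields most readers need."""
    table = get_table_response["Table"]
    storage = table.get("StorageDescriptor", {})
    return {
        "name": table["Name"],
        "location": storage.get("Location"),
        "columns": [(c["Name"], c["Type"]) for c in storage.get("Columns", [])],
        "partition_keys": [k["Name"] for k in table.get("PartitionKeys", [])],
    }


# Hand-written sample, not output captured from a real account.
sample_response = {
    "Table": {
        "Name": "orders",
        "StorageDescriptor": {
            "Location": "s3://example-bucket/orders/",
            "Columns": [
                {"Name": "order_id", "Type": "string"},
                {"Name": "amount", "Type": "double"},
            ],
        },
        "PartitionKeys": [{"Name": "year", "Type": "string"}],
    }
}

summary = summarize_table(sample_response)
print(summary["columns"])

# Against a real catalog, the response would come from:
# import boto3
# boto3.client("glue").get_table(DatabaseName="sales_db", Name="orders")
```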

Building ETL Pipelines with AWS Glue Jobs

At the core of AWS Glue’s functionality are ETL jobs—automated workflows that extract data from sources, apply transformations, and load it into targets. These jobs run in a fully managed Apache Spark environment, freeing you from cluster management.

Creating and Configuring ETL Jobs

You can create ETL jobs using the AWS Management Console, CLI, or SDKs. The console provides a visual interface where you can select source and target data stores, choose transformations, and configure job properties like memory allocation and timeout settings.

When you create a job, AWS Glue can generate a starter script for you in Python (PySpark) or Scala. You can then customize this script to implement more complex logic, such as filtering, joining, aggregating, or even calling external APIs.

- Jobs can be triggered manually, on a schedule, or via event-based rules (e.g., new file arrival in S3).

- You can specify job bookmarks to track processed data and avoid reprocessing.

- Support for incremental data processing enhances efficiency.
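
As a rough illustration of the options above, the following sketch builds the request dictionaries for boto3's `glue.create_job` and `glue.create_trigger`. It enables job bookmarks through the `--job-bookmark-option` default argument and attaches a scheduled trigger. The job name, role ARN, script location, worker settings, and Glue version are assumptions chosen for the example.

```python
def build_job_request(name, role_arn, script_location, workers=2):
    """Return keyword arguments for glue.create_job() with bookmarks enabled."""
    return {
        "Name": name,
        "Role": role_arn,
        "Command": {
            "Name": "glueetl",  # Spark ETL job type
            "ScriptLocation": script_location,
            "PythonVersion": "3",
        },
        "DefaultArguments": {
            # Track already-processed data between runs.
            "--job-bookmark-option": "job-bookmark-enable",
        },
        "GlueVersion": "4.0",   # assumed version for this example
        "WorkerType": "G.1X",
        "NumberOfWorkers": workers,
        "Timeout": 60,          # minutes
        "MaxRetries": 1,
    }


def build_schedule_trigger_request(name, job_name, cron_expression):
    """Return keyword arguments for glue.create_trigger() on a schedule."""
    return {
        "Name": name,
        "Type": "SCHEDULED",
        "Schedule": cron_expression,
        "Actions": [{"JobName": job_name}],
        "StartOnCreation": True,
    }


# Placeholder values for illustration only.
job_request = build_job_request(
    name="orders-nightly-etl",
    role_arn="arn:aws:iam::123456789012:role/ExampleGlueJobRole",
    script_location="s3://example-bucket/scripts/orders_etl.py",
)
trigger_request = build_schedule_trigger_request(
    name="orders-nightly-trigger",
    job_name=job_request["Name"],
    cron_expression="cron(30 1 * * ? *)",
)

# import boto3
# glue = boto3.client("glue")
# glue.create_job(**job_request)
# glue.create_trigger(**trigger_request)
```

Note that bookmarks only take effect if the job script itself initializes and commits the job and tags its sources with a `transformation_ctx`; enabling the argument alone is not enough.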

Using AWS Glue Studio for Visual Development

For users who prefer a drag-and-drop interface, AWS Glue Studio offers a visual ETL editor. You can build pipelines by connecting nodes representing sources, transformations, and sinks. This low-code approach lowers the barrier to entry for non-developers while still allowing advanced customization through script editing.

Glue Studio supports both batch and streaming ETL jobs, making it versatile for different use cases. For example, you could build a real-time pipeline that processes clickstream data from Kinesis and loads it into a data warehouse every minute.

Serverless Architecture and Cost Efficiency

One of the biggest advantages of AWS Glue is its serverless nature. Unlike traditional ETL tools that require provisioning and managing clusters, AWS Glue abstracts away infrastructure management. You simply define your job, and AWS handles the rest—scaling resources up or down based on workload demands.

How Serverless Reduces Operational Overhead

With AWS Glue, there’s no need to worry about patching servers, monitoring cluster health, or tuning Spark configurations. The service automatically provisions the necessary compute resources (called Data Processing Units or DPUs) and shuts them down when the job completes.

This means you only pay for the time your job runs, not for idle resources. For intermittent or unpredictable workloads, this can lead to significant cost savings compared to maintaining always-on clusters.

Pricing Model and Cost Optimization Tips

AWS Glue pricing is based on DPU-hours: the number of DPUs allocated to a job multiplied by how long the job runs, measured in hours. One DPU provides 4 vCPUs and 16 GB of memory. Usage is metered per second, so a job that finishes in a few minutes is charged for a fraction of a DPU-hour rather than a full hour.
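
To make the arithmetic concrete, here is a small worked example. The price of 0.44 USD per DPU-hour and the 60-second minimum billing duration are assumptions for illustration only; actual rates and minimums vary by region, job type, and Glue version, so check the pricing page for current figures.

```python
def glue_job_cost(dpus, runtime_seconds, price_per_dpu_hour, minimum_seconds=60):
    """Estimate the cost of one job run.

    price_per_dpu_hour and minimum_seconds are inputs you must look up;
    the defaults and sample values here are assumptions, not quoted prices.
    """
    billed_seconds = max(runtime_seconds, minimum_seconds)
    dpu_hours = dpus * billed_seconds / 3600
    return dpu_hours * price_per_dpu_hour


# 10 DPUs running for 15 minutes = 2.5 DPU-hours.
cost = glue_job_cost(dpus=10, runtime_seconds=15 * 60, price_per_dpu_hour=0.44)
print(f"{cost:.2f} USD")  # prints "1.10 USD"
```

Halving either the runtime or the DPU count halves the bill, which is why the optimization tips that follow focus on processing less data per run.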

To optimize costs:

- Use job bookmarks to avoid reprocessing the same data.

- Enable continuous logging only when debugging.

- Leverage partitioning and columnar formats (like Parquet) to reduce I/O.

- Monitor job metrics in CloudWatch to identify performance bottlenecks.

For more details on pricing, visit the official AWS Glue pricing page.

Security and Compliance in AWS Glue

Data security is non-negotiable, especially when handling sensitive information. AWS Glue provides security features to protect your data at rest and in transit, which helps you meet the requirements of frameworks like GDPR, HIPAA, and SOC 2.

Encryption and Access Control

AWS Glue integrates with AWS Key Management Service (KMS) to encrypt data at rest. You can enable encryption for job scripts, temporary directories, and output data. All data in transit is automatically encrypted using TLS.

Access to AWS Glue resources is controlled through AWS Identity and Access Management (IAM). You can define granular permissions—for example, allowing a data engineer to create jobs but not delete the Data Catalog.
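
The data engineer example above could be expressed as an IAM policy along the following lines. This is a minimal sketch rather than a production policy: the statement IDs are made up, `Resource` is left as `"*"` for brevity, and a real setup would typically scope resources to specific ARNs and also grant `iam:PassRole` for the job's execution role.

```python
import json


def build_job_author_policy():
    """Allow creating and running jobs, but explicitly deny catalog deletions."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowJobAuthoring",  # hypothetical statement ID
                "Effect": "Allow",
                "Action": [
                    "glue:CreateJob",
                    "glue:UpdateJob",
                    "glue:GetJob",
                    "glue:StartJobRun",
                    "glue:GetJobRun",
                ],
                "Resource": "*",
            },
            {
                "Sid": "DenyCatalogDeletes",  # hypothetical statement ID
                "Effect": "Deny",
                "Action": ["glue:DeleteDatabase", "glue:DeleteTable"],
                "Resource": "*",
            },
        ],
    }


policy = build_job_author_policy()
print(json.dumps(policy, indent=2))
```

An explicit `Deny` takes precedence over any `Allow` granted elsewhere, which is why the delete actions are denied rather than simply omitted.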

Audit Logging and Monitoring

All AWS Glue API calls are logged in AWS CloudTrail, providing a complete audit trail of who did what and when. You can also integrate with Amazon CloudWatch to monitor job execution, set alarms for failures, and track metrics like DPU usage and runtime duration.
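
One way to get notified about failures, used here instead of a metric-based CloudWatch alarm, is an EventBridge rule that matches Glue's "Glue Job State Change" events. The sketch below builds such an event pattern and checks it against a hand-written sample event. The `matches` helper is a deliberately tiny stand-in for EventBridge's real matching logic, and the job name and run ID are placeholders.

```python
import json


def build_job_failure_pattern(job_names):
    """Event pattern for failed or timed-out runs of the given Glue jobs."""
    return {
        "source": ["aws.glue"],
        "detail-type": ["Glue Job State Change"],
        "detail": {
            "jobName": list(job_names),
            "state": ["FAILED", "TIMEOUT"],
        },
    }


def matches(pattern, event):
    """Simplified local check: exact-value matching only, for illustration."""
    if event.get("source") not in pattern["source"]:
        return False
    if event.get("detail-type") not in pattern["detail-type"]:
        return False
    detail = event.get("detail", {})
    return all(detail.get(key) in allowed for key, allowed in pattern["detail"].items())


pattern = build_job_failure_pattern(["orders-nightly-etl"])

# Hand-written sample event with placeholder values.
failed_event = {
    "source": "aws.glue",
    "detail-type": "Glue Job State Change",
    "detail": {"jobName": "orders-nightly-etl", "state": "FAILED", "jobRunId": "jr_example"},
}
print(matches(pattern, failed_event))

# To register the rule for real:
# import boto3
# boto3.client("events").put_rule(
#     Name="glue-job-failures", EventPattern=json.dumps(pattern)
# )
```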

For organizations using AWS Lake Formation, you can centrally manage data access policies across Glue, Athena, and Redshift, ensuring consistent governance.

Advanced Features: AWS Glue for Streaming and Machine Learning

While AWS Glue started as a batch ETL tool, it has evolved to support real-time data processing and machine learning workflows. These advanced capabilities make it a versatile platform for modern data engineering needs.

Streaming ETL with AWS Glue

AWS Glue supports streaming ETL jobs that process data from Amazon Kinesis Data Streams and Apache Kafka (including Amazon MSK). Instead of waiting for data to accumulate in batches, you can ingest and transform records in near real-time.

Streaming jobs run continuously, processing data as it arrives. This is ideal for use cases like fraud detection, real-time personalization, or monitoring application logs. Glue manages checkpointing and state storage for you, so a restarted job resumes from where it left off. Whether results are exactly-once end to end also depends on the sink handling replayed batches idempotently.

Integration with AWS Machine Learning Services

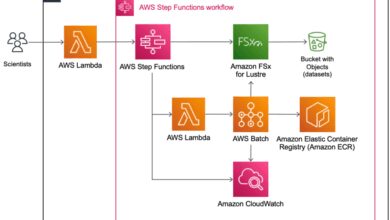

AWS Glue plays a crucial role in preparing data for machine learning. Before training a model in Amazon SageMaker, you often need to clean, normalize, and feature-engineer your dataset. AWS Glue can automate this preprocessing step, ensuring high-quality input for your ML pipelines.

You can also use Glue to backfill historical data into feature stores or generate training datasets on demand. The integration with SageMaker Pipelines allows you to orchestrate end-to-end ML workflows—from data ingestion to model deployment.

Best Practices for Optimizing AWS Glue Performance

To get the most out of AWS Glue, it’s important to follow best practices for performance, reliability, and maintainability. These guidelines help you build efficient, scalable, and cost-effective data pipelines.

Data Partitioning and Compression

Partitioning your data by date, region, or category can dramatically improve query performance and reduce costs. AWS Glue Crawlers automatically detect partitions in S3 paths (e.g., s3://bucket/year=2024/month=04/) and register them in the Data Catalog.
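
The `key=value` path convention is simple enough to illustrate in a few lines. The following sketch extracts partition keys from an S3 URI in roughly the way a reader would by eye. It is not how Glue crawlers are implemented, and it naively treats any path segment containing `=` as a partition.

```python
from urllib.parse import urlparse


def parse_hive_partitions(s3_uri):
    """Extract key=value partition segments from an S3 URI, in path order."""
    path = urlparse(s3_uri).path
    partitions = {}
    for segment in path.split("/"):
        if "=" in segment:
            key, value = segment.split("=", 1)
            partitions[key] = value
    return partitions


print(parse_hive_partitions("s3://bucket/year=2024/month=04/"))
# {'year': '2024', 'month': '04'}
```

Partition values are kept as strings here, so `month=04` keeps its leading zero.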

Using columnar formats like Parquet or ORC with compression (e.g., Snappy, GZIP) reduces file size and speeds up I/O operations. Glue can read and write these formats natively, making them ideal for large-scale analytics.

Error Handling and Retry Mechanisms

Even the best pipelines encounter errors—network timeouts, schema mismatches, or transient failures. AWS Glue provides built-in retry logic and error logging to help you handle these gracefully.

- Set up CloudWatch alarms to notify you of job failures.

- Use try/except blocks (or try/catch in Scala) in your ETL scripts for custom error handling.

- Enable job bookmarks to resume from the last successful run.

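Additionally, you can route failed records to a separate error location, such as a dedicated S3 prefix or a dead-letter queue (DLQ) that you manage yourself, so that no data is lost and failures can be analyzed later.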
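
The idea of setting failed records aside can be sketched in plain Python, independent of Spark. In the example below, records that fail a transformation are collected with their error instead of aborting the run. The function names, record fields, and sample rows are all hypothetical; in a real job the dead-letter list would be written to whatever error location you choose.

```python
def transform_with_dead_letter(records, transform):
    """Apply transform to each record; collect failures instead of raising."""
    succeeded, dead_letter = [], []
    for record in records:
        try:
            succeeded.append(transform(record))
        except (KeyError, ValueError, TypeError) as exc:
            # Keep the original record and the reason it failed.
            dead_letter.append({"record": record, "error": repr(exc)})
    return succeeded, dead_letter


def to_order(record):
    """Example transformation: require order_id and a numeric amount."""
    return {"order_id": record["order_id"], "amount": float(record["amount"])}


rows = [
    {"order_id": "A1", "amount": "19.99"},
    {"order_id": "A2", "amount": "not-a-number"},  # ValueError
    {"amount": "5.00"},                            # KeyError: missing order_id
]

good, bad = transform_with_dead_letter(rows, to_order)
print(len(good), "succeeded;", len(bad), "sent to dead letter")
```

Catching only the expected exception types keeps genuine bugs, such as a typo in the transformation, from being silently swallowed.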

Real-World Use Cases of AWS Glue

AWS Glue isn’t just a theoretical tool—it’s being used by companies across industries to solve real business problems. From financial services to healthcare, its flexibility and scalability make it a go-to solution for data integration.

Data Lake Ingestion and Preparation

Many organizations use AWS Glue to build and maintain data lakes on Amazon S3. For example, a media company might ingest terabytes of video metadata, user engagement logs, and ad impressions daily. Glue crawlers discover the schema, jobs clean and enrich the data, and the output is stored in a structured format for analysis with Athena or QuickSight.

Cloud Migration and Legacy System Integration

When migrating from on-premises databases to the cloud, AWS Glue simplifies the ETL process. A manufacturing firm, for instance, might use Glue to extract data from an old Oracle database, transform it to fit a modern data model, and load it into Amazon Redshift for reporting.

The ability to connect to JDBC sources and support for custom scripts makes Glue ideal for handling complex legacy data structures.

What is AWS Glue used for?

AWS Glue is primarily used for automating ETL (Extract, Transform, Load) processes. It helps discover, clean, transform, and move data between various sources and targets, making it ready for analytics, machine learning, or storage in a data lake.

Is AWS Glue serverless?

Yes, AWS Glue is a fully serverless service. It automatically provisions and scales the necessary compute resources (DPUs) to run your ETL jobs, and you only pay for the time your jobs are running.

How does AWS Glue compare to Apache Airflow?

While both can orchestrate workflows, AWS Glue is specialized for ETL with built-in data cataloging and transformation capabilities. Apache Airflow is a general-purpose workflow orchestrator that requires more setup but offers greater flexibility. Glue is easier to use for pure ETL, while Airflow suits complex, multi-step pipelines involving non-ETL tasks.

Can AWS Glue handle real-time data?

Yes, AWS Glue supports streaming ETL jobs that process data from Amazon Kinesis and Apache Kafka, including Amazon MSK (Managed Streaming for Apache Kafka), in near real-time, enabling use cases like live dashboards and anomaly detection.

Does AWS Glue support Python?

Absolutely. AWS Glue allows you to write ETL scripts in Python (PySpark) or Scala. It also provides a Python shell job type for lightweight tasks that don’t require Spark, such as metadata cleanup or API calls.

AWS Glue has evolved into a comprehensive data integration platform that empowers organizations to unlock the value of their data. From automated schema discovery to real-time streaming and machine learning integration, it offers a rich set of features designed for the modern data stack. By eliminating infrastructure management, enforcing security, and enabling rapid development, AWS Glue allows data engineers and analysts to focus on what matters most—deriving insights. Whether you’re building a data lake, migrating systems, or powering AI initiatives, AWS Glue provides the tools to do it efficiently and at scale.
