AWS CLI Mastery: 7 Powerful Tips to Supercharge Your Workflow

Unlock the full potential of AWS with the AWS CLI—a game-changing tool that puts the power of Amazon’s cloud at your fingertips. Whether you’re automating tasks or managing infrastructure, mastering the AWS CLI is essential for any cloud professional.

What Is AWS CLI and Why It’s a Game-Changer

The AWS Command Line Interface (CLI) is a unified tool that allows developers, system administrators, and DevOps engineers to interact with Amazon Web Services through commands in a terminal or script. Instead of navigating the AWS Management Console with a mouse, you can control multiple AWS services directly from your command line—faster, more efficiently, and with full automation potential.

Developed and maintained by Amazon, the AWS CLI supports a vast range of AWS services, including EC2, S3, Lambda, IAM, CloudFormation, and more. It’s available for Windows, macOS, and Linux, making it a cross-platform powerhouse for cloud management. With the AWS CLI, you can launch instances, manage buckets, configure security policies, and deploy applications—all without leaving your terminal.

Core Features of AWS CLI

The AWS CLI is packed with features that make it indispensable for cloud operations. One of its most powerful aspects is its ability to automate repetitive tasks. Instead of clicking through the AWS console every time you need to back up a database or scale a service, you can write a script that does it automatically.

- Service Integration: The CLI supports over 200 AWS services, enabling you to manage everything from storage to machine learning with a single tool.

- Scriptable and Automatable: Commands can be chained together in shell scripts, allowing for complex workflows and scheduled operations via cron jobs or CI/CD pipelines.

- Output Formatting: You can choose how the CLI returns data (JSON, text, or table, plus YAML in version 2), which makes results easier to parse in automation scripts.

This level of control is why the AWS CLI is considered a must-have tool for anyone serious about AWS.

How AWS CLI Compares to the AWS Console

While the AWS Management Console provides a user-friendly graphical interface, the AWS CLI offers precision, speed, and scalability. For example, launching a single EC2 instance via the console might take a few clicks and some waiting. But with the AWS CLI, you can launch 10 instances in seconds using a single command.
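As a rough sketch of the "many instances from one command" point: run-instances accepts a --count option. The helper name launch_fleet is hypothetical, and the AMI ID in the usage line is the placeholder used elsewhere in this article, not a real image.

```shell
#!/usr/bin/env bash
# Hypothetical helper: launch COUNT identical instances with a single API call.
# Arguments: COUNT, AMI ID, optional instance type (defaults to t2.micro).
launch_fleet() {
  local count="$1" ami="$2" type="${3:-t2.micro}"
  aws ec2 run-instances \
    --image-id "$ami" \
    --instance-type "$type" \
    --count "$count" \
    --query 'Instances[].InstanceId' \
    --output text
}

# Usage (commented out because it creates billable resources):
# launch_fleet 10 ami-0abcdef1234567890
```

The --query and --output text options reduce the response to the new instance IDs, which is usually all a follow-up script needs.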

Moreover, the CLI is ideal for infrastructure-as-code (IaC) practices. While the console is great for exploration and one-off tasks, the CLI integrates seamlessly with tools like Terraform, Ansible, and Jenkins. This makes it a cornerstone of modern DevOps workflows.

“The AWS CLI is the bridge between manual operations and full automation.” — AWS Certified DevOps Engineer

Installing and Configuring AWS CLI

Before you can harness the power of the AWS CLI, you need to install and configure it properly. The process is straightforward, but attention to detail is crucial—especially when setting up authentication credentials.

The AWS CLI comes in two versions: v1 and v2. AWS recommends using version 2, which includes several improvements like better auto-suggestions, built-in support for SSO (Single Sign-On), and enhanced installation methods. Version 1 is still supported but lacks some of the modern features found in v2.

Step-by-Step Installation Guide

Installing the AWS CLI varies slightly depending on your operating system. Below are the recommended methods for each platform:

- macOS: Use Homebrew with the command brew install awscli. Alternatively, download the official installer from the AWS CLI official website.

- Windows: Download the MSI installer from AWS’s site or use Chocolatey with choco install awscli.

- Linux: For version 2, download and run the official installer from the AWS CLI website. The pip command pip install awscli --upgrade --user installs version 1 only, since AWS does not publish version 2 to PyPI. On Amazon Linux 2 the CLI is pre-installed; just update it with sudo yum install aws-cli.

After installation, verify it works by running aws --version in your terminal. You should see output showing the version number, Python version, and OS.
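The version check can also be scripted, which is handy at the top of automation that depends on version 2 features. This is a minimal sketch: the function names are hypothetical, and the sample version string in the comment is illustrative rather than captured output.

```shell
#!/usr/bin/env bash
# Extract the major version from a line shaped like
# "aws-cli/2.15.30 Python/3.11.8 Linux/5.10 exe/x86_64" (illustrative sample).
aws_major_version() {
  local line="$1"
  line="${line#aws-cli/}"   # drop the "aws-cli/" prefix
  echo "${line%%.*}"        # keep everything before the first dot
}

# Report whether the CLI on PATH is version 2 or newer.
check_aws_cli() {
  if ! command -v aws >/dev/null 2>&1; then
    echo "aws not found on PATH"
    return 1
  fi
  local major
  # Some older releases print the version to stderr, hence 2>&1.
  major="$(aws_major_version "$(aws --version 2>&1)")"
  if [ "$major" -ge 2 ]; then
    echo "AWS CLI v$major detected"
  else
    echo "AWS CLI v$major detected; consider upgrading to v2"
  fi
}
```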

Configuring AWS CLI with IAM Credentials

Once installed, the next step is configuration. Run aws configure to set up your credentials. You’ll be prompted for:

- AWS Access Key ID

- AWS Secret Access Key

- Default region name (e.g., us-east-1)

- Default output format (json, text, or table)

These credentials are tied to an IAM (Identity and Access Management) user. For security, always use IAM users with the principle of least privilege—never use your root account credentials.

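You can generate access keys in the IAM console under ‘Security credentials’. After you enter them, the AWS CLI stores them in ~/.aws/credentials as plain text (they are not encrypted), so restrict the file’s permissions and never commit it to version control. The default region and output format are stored separately in ~/.aws/config.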

Essential AWS CLI Commands for Daily Use

Once you’re set up, it’s time to start using the AWS CLI. There’s a vast library of commands, but a few are fundamental for everyday cloud management. Mastering these will save you hours and reduce human error.

Each AWS service has its own command namespace. For example, aws s3 handles Amazon S3 operations, while aws ec2 manages EC2 instances. Commands follow a consistent pattern: aws [service] [action] [options].

Managing EC2 Instances with AWS CLI

Amazon EC2 is one of the most widely used AWS services. With the AWS CLI, you can launch, stop, terminate, and monitor instances programmatically.

To launch an EC2 instance, use:

aws ec2 run-instances --image-id ami-0abcdef1234567890 --instance-type t2.micro --key-name MyKeyPair --security-group-ids sg-903004f8 --subnet-id subnet-6e7f829e

This command launches a t2.micro instance using a specific AMI, key pair, and network settings. You can customize it further with user data scripts or IAM roles.

To stop an instance:

aws ec2 stop-instances --instance-ids i-1234567890abcdef0

To terminate it:

aws ec2 terminate-instances --instance-ids i-1234567890abcdef0

You can also list all running instances:

aws ec2 describe-instances --filters "Name=instance-state-name,Values=running"

This returns detailed JSON output, which you can filter using tools like jq or the CLI’s built-in --query parameter.

Working with S3 Buckets and Objects

Amazon S3 is the backbone of cloud storage. The AWS CLI makes it easy to create buckets, upload files, and manage permissions.

To create a new S3 bucket:

aws s3 mb s3://my-unique-bucket-name

To upload a file:

aws s3 cp local-file.txt s3://my-unique-bucket-name/

To sync an entire folder:

aws s3 sync ./my-folder s3://my-unique-bucket-name/my-folder

The sync command is especially powerful: it only transfers files that have changed, making it ideal for backups and deployments.
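Because sync can move a lot of data, it is worth previewing a run before executing it. The sketch below wraps the sync command with the --dryrun option, which prints the planned operations without performing them. The helper name safe_sync and the "*.tmp" exclusion pattern are assumptions chosen for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: preview a sync by default, run it for real on request.
# Arguments: SOURCE, DESTINATION, optional mode ("apply" to actually transfer).
safe_sync() {
  local src="$1" dest="$2" mode="${3:-preview}"
  if [ "$mode" = "apply" ]; then
    aws s3 sync "$src" "$dest" --exclude "*.tmp"
  else
    # --dryrun lists what would be copied without transferring anything.
    aws s3 sync "$src" "$dest" --exclude "*.tmp" --dryrun
  fi
}

# Usage:
# safe_sync ./my-folder s3://my-unique-bucket-name/my-folder        # preview
# safe_sync ./my-folder s3://my-unique-bucket-name/my-folder apply  # transfer
```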

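You can also set bucket policies, enable versioning, or configure static website hosting:

aws s3 website s3://my-unique-bucket-name --index-document index.html

This command configures the bucket for static website hosting. It does not make the objects public by itself; that still requires a bucket policy that allows public reads.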

Advanced AWS CLI Techniques for Power Users

Once you’re comfortable with the basics, it’s time to level up. The AWS CLI offers advanced features that unlock deeper automation, better security, and more efficient workflows.

These techniques are used by DevOps engineers and cloud architects to manage large-scale environments with precision and repeatability.

Using Query Parameters and Filters

One of the most powerful features of the AWS CLI is the --query parameter, which allows you to filter and extract specific data from JSON responses using JMESPath expressions.

For example, to get only the instance IDs of running EC2 instances:

aws ec2 describe-instances --filters "Name=instance-state-name,Values=running" --query "Reservations[*].Instances[*].InstanceId" --output table

This returns a clean table with just the instance IDs, which is easy to read at a glance. For scripts, --output text is usually the simpler format to parse.
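For use inside a script, the same query can be sketched with text output instead of a table. The function name running_instance_ids is hypothetical; the filter and query mirror the command above, with the flattening form of the JMESPath expression.

```shell
#!/usr/bin/env bash
# Print the IDs of running instances, one per line.
# Using [] instead of [*] flattens the nested lists into a single list, and
# --output text separates the IDs with tabs, so tr puts each on its own line.
running_instance_ids() {
  aws ec2 describe-instances \
    --filters "Name=instance-state-name,Values=running" \
    --query "Reservations[].Instances[].InstanceId" \
    --output text | tr '\t' '\n'
}

# Example loop over the result:
# for id in $(running_instance_ids); do
#   echo "running: $id"
# done
```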

You can also combine filters and queries to find specific resources. Relative time ranges need a little help, though: to list all S3 buckets created in the last 30 days, you compute the cutoff date in an external script (Python or bash) and compare it against each bucket’s CreationDate, since the CLI has no built-in "last N days" filter for buckets.
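One way to sketch the "recent buckets" case in bash is to let the CLI print each bucket's creation date and name, then filter in awk. The function name buckets_since is hypothetical, and the approach assumes the CLI prints CreationDate as an ISO 8601 timestamp, which sorts correctly as plain text.

```shell
#!/usr/bin/env bash
# Read "CreationDate<TAB>Name" lines on stdin and print the names of buckets
# created on or after CUTOFF (format YYYY-MM-DD).
buckets_since() {
  local cutoff="$1"
  # Appending "" forces a string comparison; ISO 8601 dates sort lexicographically.
  awk -F '\t' -v cutoff="$cutoff" '($1 "") >= (cutoff "") { print $2 }'
}

# Usage sketch (GNU date syntax; on macOS use: date -v-30d +%Y-%m-%d):
# cutoff="$(date -d '30 days ago' +%Y-%m-%d)"
# aws s3api list-buckets --query 'Buckets[].[CreationDate,Name]' --output text \
#   | buckets_since "$cutoff"
```

Keeping the filter as a function that reads standard input makes it easy to try out on sample lines before pointing it at a real account.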

Scripting and Automation with AWS CLI

The real power of the AWS CLI shines in automation. You can write shell scripts that perform complex sequences of actions—like deploying an application, scaling resources, and sending notifications.

For example, here’s a simple bash script that backs up a folder to S3 daily:

#!/bin/bash
BUCKET="s3://my-backup-bucket"
FOLDER="/home/user/data"
TIMESTAMP=$(date +%Y%m%d-%H%M%S)
# Quote the variables so paths containing spaces are passed intact
aws s3 sync "$FOLDER" "$BUCKET/$TIMESTAMP"
aws s3 ls "$BUCKET"

Schedule this with cron to run automatically:

0 2 * * * /home/user/backup.sh

This runs the script every day at 2 AM.

In CI/CD pipelines, the AWS CLI is often used to deploy Lambda functions, update ECS services, or invalidate CloudFront caches. For example:

aws cloudfront create-invalidation --distribution-id E1234567890 --paths "/index.html"

This invalidates the cache for a specific file, ensuring users get the latest version after a deployment.

Security Best Practices for AWS CLI

With great power comes great responsibility. The AWS CLI gives you deep access to your cloud environment, so security must be a top priority.

Misconfigured credentials or poorly written scripts can lead to data leaks, unauthorized access, or accidental deletions. Follow these best practices to keep your AWS environment secure.

Managing IAM Roles and Policies

Always use IAM roles and policies to limit what the AWS CLI can do. Instead of giving full admin access, create custom policies that grant only the permissions needed for a specific task.

For example, if a script only needs to upload files to S3, attach a policy like this:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:PutObject",
        "s3:ListBucket"
      ],
      "Resource": [
        "arn:aws:s3:::my-bucket",
        "arn:aws:s3:::my-bucket/*"
      ]
    }
  ]
}

This ensures that even if the credentials are compromised, the attacker can’t delete buckets or access other services.

Using Temporary Credentials and AWS SSO

Instead of long-term access keys, consider using temporary credentials via AWS Security Token Service (STS) or AWS IAM Identity Center, the successor to AWS Single Sign-On (SSO).

AWS CLI v2 supports SSO natively. You can log in once using aws sso login, and the CLI will automatically refresh your credentials. This is especially useful in enterprise environments where users need to switch between multiple AWS accounts.

Temporary credentials expire automatically, so a leaked key is only useful to an attacker for a short window.

“Never hardcode AWS credentials in scripts. Use environment variables or AWS SSO instead.” — Cloud Security Expert
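A script that follows this advice can check up front that credentials are coming from the environment rather than from values embedded in the file. The sketch below is deliberately narrow: the function name require_aws_env is hypothetical, and it only looks at AWS_PROFILE and the access key variables, not at other sources the CLI can use such as instance roles.

```shell
#!/usr/bin/env bash
# Fail fast when no credential source is visible in the environment.
# Checks only AWS_PROFILE and the AWS_ACCESS_KEY_ID / AWS_SECRET_ACCESS_KEY pair.
require_aws_env() {
  if [ -n "${AWS_PROFILE:-}" ]; then
    echo "using profile: $AWS_PROFILE"
  elif [ -n "${AWS_ACCESS_KEY_ID:-}" ] && [ -n "${AWS_SECRET_ACCESS_KEY:-}" ]; then
    echo "using access keys from the environment"
  else
    echo "no AWS credentials found in the environment" >&2
    return 1
  fi
}

# Usage at the top of a script:
# require_aws_env || exit 1
```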

Troubleshooting Common AWS CLI Issues

Even experienced users run into problems with the AWS CLI. Understanding common errors and how to fix them can save you hours of frustration.

Most issues stem from misconfiguration, permission errors, or network problems. The CLI provides detailed error messages, but they can be cryptic if you don’t know what to look for.

Authentication and Permission Errors

One of the most frequent issues is InvalidClientTokenId or AccessDenied errors. These usually mean:

- The access key is incorrect or has been deleted.

- The IAM user doesn’t have the required permissions.

- The AWS region is misconfigured.

To fix this, re-run aws configure and double-check your keys. Use aws sts get-caller-identity to verify which user you’re logged in as.

If you’re using roles, ensure the trust policy allows the CLI to assume the role. You can assume a role using:

aws sts assume-role --role-arn arn:aws:iam::123456789012:role/MyRole --role-session-name CLI-Session

Then export the temporary credentials to your environment.
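The export step can be sketched as a small bash function. The name assume_role_env is hypothetical; the query picks the three credential fields out of the assume-role response and the CLI reads the resulting environment variables on later calls.

```shell
#!/usr/bin/env bash
# Assume a role and export the temporary credentials into the current shell.
# Define or source this in your shell; running it as a child script would
# lose the exports when that process exits.
assume_role_env() {
  local role_arn="$1" session_name="${2:-CLI-Session}"
  local creds
  creds="$(aws sts assume-role \
    --role-arn "$role_arn" \
    --role-session-name "$session_name" \
    --query 'Credentials.[AccessKeyId,SecretAccessKey,SessionToken]' \
    --output text)" || return 1
  # --output text prints the three values on one line, separated by tabs.
  read -r AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_SESSION_TOKEN <<< "$creds"
  export AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_SESSION_TOKEN
}

# Usage:
# assume_role_env arn:aws:iam::123456789012:role/MyRole
# aws sts get-caller-identity   # should now show the assumed role
```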

Handling Rate Limits and API Throttling

AWS services have API rate limits. If you run too many commands too quickly, you might hit these limits and receive ThrottlingException errors.

To avoid this:

- Add delays between requests using sleep in scripts.

- Use exponential backoff logic in automation.

- Leverage AWS CLI’s built-in retry mechanism, which automatically retries failed requests.
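The backoff idea from the list above can be sketched as a generic bash wrapper. The function name retry_with_backoff is hypothetical, and it retries on any non-zero exit code rather than on throttling errors specifically, which is a simplification.

```shell
#!/usr/bin/env bash
# Run a command, retrying with exponentially growing delays on failure.
# Usage: retry_with_backoff MAX_ATTEMPTS BASE_DELAY_SECONDS command [args...]
retry_with_backoff() {
  local max_attempts="$1" delay="$2"
  shift 2
  local attempt=1
  until "$@"; do
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "giving up after $attempt attempts: $*" >&2
      return 1
    fi
    sleep "$delay"
    delay=$((delay * 2))      # the wait doubles after every failed attempt
    attempt=$((attempt + 1))
  done
}

# Usage:
# retry_with_backoff 4 1 aws s3 ls s3://my-backup-bucket
#
# The CLI's own retry behavior can be tuned with environment variables:
# export AWS_RETRY_MODE=standard
# export AWS_MAX_ATTEMPTS=5
```

Tuning the built-in retries is often enough on its own; a wrapper like this is mainly useful when a whole multi-step operation needs to be retried as a unit.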

You can also monitor your usage in AWS CloudTrail and adjust your scripts accordingly.

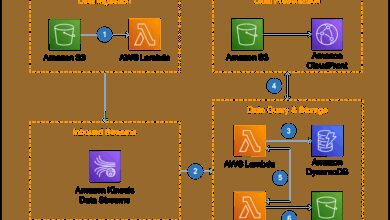

Integrating AWS CLI with DevOps Tools

The AWS CLI isn’t just a standalone tool—it’s a key component in modern DevOps ecosystems. It integrates seamlessly with popular tools like Jenkins, GitHub Actions, Terraform, and Ansible.

By embedding AWS CLI commands into your CI/CD pipelines, you can automate deployments, infrastructure provisioning, and monitoring.

Using AWS CLI in CI/CD Pipelines

In Jenkins or GitHub Actions, you can install the AWS CLI as part of your pipeline and use it to deploy applications.

For example, in a GitHub Actions workflow:

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - name: Configure AWS Credentials
        uses: aws-actions/configure-aws-credentials@v4
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws-region: us-east-1
      - name: Deploy to S3
        run: |
          aws s3 sync build/ s3://my-website-bucket --delete

This workflow configures the AWS CLI with credentials stored as repository secrets and deploys a static site to S3.

Combining AWS CLI with Terraform and Ansible

While Terraform manages infrastructure as code, the AWS CLI can be used to inspect or debug resources. For example, after applying a Terraform plan, you can use aws ec2 describe-instances to verify that instances were created.

Similarly, Ansible can call AWS CLI commands using the command module, or use its native AWS modules, which talk to the same AWS APIs through the boto3 SDK rather than through the CLI.

This hybrid approach gives you flexibility—using Terraform for declarative provisioning and the AWS CLI for imperative actions.

Future of AWS CLI and Emerging Trends

The AWS CLI continues to evolve alongside AWS services. With the rise of serverless, containers, and AI, the CLI is adapting to support new workflows and technologies.

AWS is investing in better developer experience, including improved documentation, auto-completion, and integration with cloud shells.

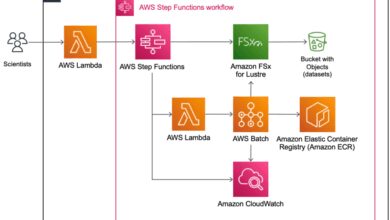

Serverless and Container Workflows

As more companies adopt Lambda and ECS, the AWS CLI is adding commands to streamline these workflows. For example:

- aws lambda invoke to test functions

- aws ecs update-service to deploy new container versions

- aws ecr get-login-password to authenticate with Elastic Container Registry

These commands make it easier to manage modern architectures from the command line.
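As an illustration of the container workflow, the ECR login is commonly piped straight into Docker. This sketch assumes Docker is installed and that the registry is a private ECR registry in a standard AWS region; the function names and the account ID in the usage line are placeholders.

```shell
#!/usr/bin/env bash
# Build the hostname of a private ECR registry from account ID and region.
ecr_registry() {
  local account_id="$1" region="$2"
  echo "${account_id}.dkr.ecr.${region}.amazonaws.com"
}

# Pipe a short-lived password from the CLI into docker login, so it never
# appears in shell history or on disk.
ecr_login() {
  local account_id="$1" region="$2"
  aws ecr get-login-password --region "$region" \
    | docker login --username AWS --password-stdin \
        "$(ecr_registry "$account_id" "$region")"
}

# Usage:
# ecr_login 123456789012 us-east-1
```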

AI and Machine Learning Integration

AWS is expanding CLI support for AI services like SageMaker and Rekognition. You can now create training jobs, deploy models, and analyze images using CLI commands.

For example:

aws sagemaker create-training-job --training-job-name my-job --algorithm-specification ...

This allows data scientists and ML engineers to automate model workflows without leaving the terminal.

As AI becomes more accessible, expect the AWS CLI to play a central role in democratizing machine learning operations.

What is AWS CLI used for?

The AWS CLI is used to manage Amazon Web Services from the command line. It allows users to control services like EC2, S3, Lambda, and IAM through commands, enabling automation, scripting, and infrastructure management without using the web console.

How do I install AWS CLI on Windows?

Download the MSI installer from the official AWS website, run it, and follow the prompts. After installation, open Command Prompt or PowerShell and run aws --version to verify it works.

Can I use AWS CLI with multiple accounts?

Yes. You can configure multiple named profiles using aws configure --profile profile-name. Switch between them by passing the --profile flag to a command or by setting the AWS_PROFILE environment variable.
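As a small sketch of working across accounts, the loop below runs the same read-only command against several profiles. The function name whoami_all and the profile names in the usage line are hypothetical; use whatever profiles exist in your own configuration.

```shell
#!/usr/bin/env bash
# Print the account ID behind each named profile passed as an argument.
whoami_all() {
  local profile
  for profile in "$@"; do
    printf '%s: ' "$profile"
    aws sts get-caller-identity --profile "$profile" \
      --query Account --output text
  done
}

# Usage:
# whoami_all dev staging prod
#
# Or pick one profile for the whole shell session:
# export AWS_PROFILE=dev
```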

Is AWS CLI free to use?

Yes, the AWS CLI itself is free. However, you are charged for the AWS services you use through it, such as EC2 instances, S3 storage, or Lambda invocations.

How do I update AWS CLI to version 2?

On macOS with Homebrew: brew upgrade awscli. On Windows: download and run the latest MSI installer. On Linux: download and run the latest version 2 installer from the AWS CLI website; pip install --upgrade awscli only upgrades version 1. Always check the official AWS CLI installation guide for the latest instructions.

Mastering the AWS CLI is a critical skill for anyone working in the cloud. From basic commands to advanced automation, it offers unparalleled control over your AWS environment. By following best practices in security, configuration, and integration, you can streamline operations, reduce errors, and build scalable, efficient workflows. Whether you’re a developer, DevOps engineer, or cloud architect, the AWS CLI is your gateway to cloud mastery.
